This article is a translation of the Japanese version.

The Japanese version is here*1

Hi, this is chang.

Today I'd like to share my personal opinion about AI: learning from big data does not make AI smarter.

0. What is big data?

Wikipedia defines big data as "a field that treats ways to analyze, systematically extract information from, or otherwise deal with data sets that are too large or complex to be dealt with by traditional data-processing application software*2." I think people started using the term about 10 years ago.

When data is huge, it is difficult to understand all of its contents. For example, I heard that, as of 2010, there were 129,864,880 books in the world*3. No one can read all of them. About 400 years ago, René Descartes already said: "No one can understand all the knowledge existing in the world. Only god can do that." Sorry for my poor translation.

In terms of books, paper was the first innovative technology that made big data possible. Human memory tends to fade or vanish in the long run, whereas information recorded on paper stays the same. Over the last several decades, hard disks and the internet were invented, and they made the amount of information bigger and bigger.

The capacity of the human brain is limited, yet information far beyond that capacity really exists. I think this causes a kind of fear. In fact, I find it difficult to keep up with the new words and concepts that appear every day. When I read the newspaper, I skim overviews like "Oh, cloud is awesome!", but in many cases I do not try to understand them deeply. It feels endless. Maybe I have just gotten old...? In society, too, many people, especially young people, use internet search rather than studying with books.

That is why AI attracts people's attention. When trying to judge something, it is difficult to check all the related information and find a neutral answer, so people look to AI for help.

1. Data set: dinosaur images

I picked Google image search as an example of big data. Today's material is dinosaurs, my favorite creatures. I built a deep learning model to classify the type of dinosaur.

I gathered images with the following keywords:

- tyrannosaurus

- triceratops

- brachiosaurus

- stegosaurus

- iguanodon

- ornithomimus

- pteranodon

Below are some of the images. I pixelated them to avoid copyright issues.

Dinosaur image:

Most are academic illustrations, but skeleton photographs, stuffed toys, CD jackets, etc. are also included.

2. Learning

For each type of dinosaur, 50 images were randomly selected as test data. Next, 100 images were randomly selected from the remaining 400 images as training data. This gives 700 training images (100 x 7) and 350 test images (50 x 7).
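The per-type random split described above can be sketched as follows. This is an illustrative assumption, not the code from the repository: `split_per_class` and the file names are hypothetical, and each type is assumed to have 450 images (50 for test plus the 400 remaining ones mentioned above).

```python
import random

def split_per_class(images_by_class, n_test=50, n_train=100, seed=0):
    """Randomly split each class into test data and training data.

    For every class, n_test images are drawn at random as test data, and
    n_train images are drawn at random from the remaining images as
    training data. Any images left over are not used.
    """
    rng = random.Random(seed)
    train, test = [], []
    for label, images in images_by_class.items():
        if len(images) < n_test + n_train:
            raise ValueError(f"class {label!r} has only {len(images)} images")
        shuffled = list(images)
        rng.shuffle(shuffled)  # random permutation, so both slices are random draws
        test += [(img, label) for img in shuffled[:n_test]]
        train += [(img, label) for img in shuffled[n_test:n_test + n_train]]
    return train, test

classes = ["tyrannosaurus", "triceratops", "brachiosaurus", "stegosaurus",
           "iguanodon", "ornithomimus", "pteranodon"]
# Hypothetical file names: 450 per class (50 for test + 400 remaining)
data = {c: [f"{c}_{i:03d}.jpg" for i in range(450)] for c in classes}

train, test = split_per_class(data, n_test=50, n_train=100)
print(len(train), len(test))  # 700 350
```

Calling `split_per_class(data, n_train=300)` would give the enlarged 2100-image training set used in section 3, with the same 350 test images.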

The network structure was almost the same as in the MNIST samples. Reading the images took a long time because of the number and byte size of the RGB images, so I used C to load them.

The inference accuracy is shown below:

| Type | Accuracy [%] |
|---|---|
| Tyrannosaurus | 32.0 |
| Triceratops | 24.0 |
| Brachiosaurus | 40.0 |
| Stegosaurus | 32.0 |
| Iguanodon | 32.0 |
| Ornithomimus | 16.0 |
| Pteranodon | 10.0 |

The average over the 7 types was 26.6%.

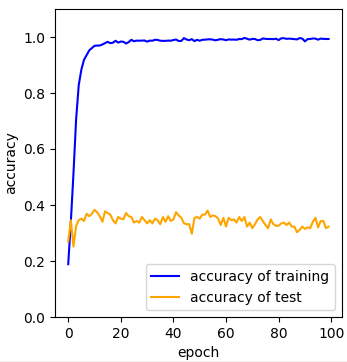

The cause of the poor accuracy is clear. The learning transition shows typical overfitting: the accuracy increased for the training data but not for the test data.

Learning transition:

The accuracy increased for training data, but not for test data.
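One way to read a learning transition like this programmatically is to compare the two curves epoch by epoch. The sketch below is an assumption, not the code used for this article: `overfitting_report`, the `patience` window, and the accuracy values are all hypothetical. It flags overfitting when training accuracy is still rising but test accuracy has not beaten its earlier best during the last few epochs.

```python
def overfitting_report(train_acc, test_acc, patience=3):
    """Summarize a learning curve and flag likely overfitting.

    Returns (best_epoch, final_gap, overfitting), where best_epoch is the
    index of the highest test accuracy, final_gap is training minus test
    accuracy at the last epoch, and overfitting is True when training
    accuracy is still rising over the last `patience` epochs while test
    accuracy has not exceeded its earlier best in that window.
    """
    if len(train_acc) != len(test_acc) or len(train_acc) <= patience:
        raise ValueError("curves must have equal length greater than patience")
    best_epoch = max(range(len(test_acc)), key=lambda i: test_acc[i])
    final_gap = train_acc[-1] - test_acc[-1]
    train_rising = train_acc[-1] > train_acc[-1 - patience]
    test_stalled = max(test_acc[-patience:]) <= max(test_acc[:-patience])
    return best_epoch, final_gap, train_rising and test_stalled

# Hypothetical accuracies per epoch (not the curves measured in this article)
train_acc = [0.20, 0.35, 0.50, 0.65, 0.80, 0.90, 0.97]
test_acc = [0.18, 0.24, 0.27, 0.26, 0.27, 0.26, 0.25]

best, gap, overfit = overfitting_report(train_acc, test_acc)
print(best, f"{gap:.2f}", overfit)  # 2 0.72 True
```

A large, growing gap with a flat test curve is the pattern described above; a curve where both accuracies keep rising together would not be flagged.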

3. Improvement A: Increasing data

To improve the inference accuracy, I tried increasing the amount of training data. I added 200 more images per type, giving 2100 training images (300 x 7).

Below is the accuracy obtained with the enlarged training data and the same source code.

| Type | Accuracy [%] |
|---|---|
| Tyrannosaurus | 48.0 |
| Triceratops | 28.0 |
| Brachiosaurus | 34.0 |
| Stegosaurus | 42.0 |
| Iguanodon | 32.0 |
| Ornithomimus | 28.0 |
| Pteranodon | 10.0 |

The average accuracy was 31.7%, which is not much of a change.
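The 31.7% is the plain average of the seven per-type accuracies. Because every type has the same number of test images (50), this equals the accuracy over all 350 test images pooled together. A small check, assuming the averages in this article are unweighted means; `mean_accuracy` and `pooled_accuracy` are hypothetical helper names:

```python
def mean_accuracy(acc_percent):
    """Unweighted mean of the per-type accuracies (in %)."""
    return sum(acc_percent.values()) / len(acc_percent)

def pooled_accuracy(acc_percent, n_test=50):
    """Accuracy over all test images taken together (in %).

    Each per-type accuracy is converted back into a count of correctly
    classified images, assuming n_test test images for every type.
    """
    correct = sum(round(a * n_test / 100) for a in acc_percent.values())
    return 100 * correct / (n_test * len(acc_percent))

# Per-type accuracies from the table in this section
section3 = {"Tyrannosaurus": 48.0, "Triceratops": 28.0, "Brachiosaurus": 34.0,
            "Stegosaurus": 42.0, "Iguanodon": 32.0, "Ornithomimus": 28.0,
            "Pteranodon": 10.0}

print(f"{mean_accuracy(section3):.1f}")    # 31.7
print(f"{pooled_accuracy(section3):.1f}")  # 31.7
```

With unequal test sets per type the two numbers would generally differ, so the pooled figure is the safer one to report in that case.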

The learning transition showed overfitting, just as in section 2.

Learning transition:

Even with more training data, the accuracy for the test data did not improve.

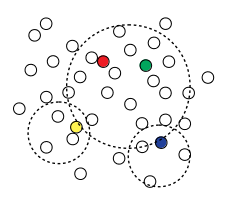

Statistically, if the population contains variation, a sample contains the same variation no matter where it is drawn from. Increasing the number of images from 100 to 300 does not reduce that variation. Therefore, increasing the training data does not necessarily improve the accuracy of deep learning.

Illustration: drawing a sample from the population

A sample contains the same variation no matter where it is drawn from.
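The statistical point can be checked with a small simulation. As a simplifying assumption, each image is reduced to one hypothetical numeric "feature", and the population is synthetic Gaussian data, not the dinosaur images. The sketch draws many random samples of 100 and of 300 items and averages their standard deviations.

```python
import random
import statistics

def mean_sample_stdev(population, n, trials=200, seed=0):
    """Average standard deviation of `trials` random samples of size n."""
    rng = random.Random(seed)
    return statistics.mean(
        statistics.stdev(rng.sample(population, n)) for _ in range(trials)
    )

# Hypothetical population: one numeric "feature" per image with spread 1.0
rng = random.Random(42)
population = [rng.gauss(0.0, 1.0) for _ in range(10_000)]
sigma = statistics.pstdev(population)

# The spread of a sample stays close to sigma whether it holds 100 or 300 items
for n in (100, 300):
    print(n, round(mean_sample_stdev(population, n), 2), round(sigma, 2))
```

Both sample sizes give a spread close to the population's own; tripling the sample does not shrink it.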

4. Improvement B: Reducing the variation of images

The result of section 3 shows that it is necessary to reduce the variation of the training data to improve the accuracy. It is hard to check hundreds of images one by one, so today I used a trick instead.

Google image search places well-known, frequently viewed images at the top of the results. If you look through the images in ranking order, you can see that many academic illustrations are near the top and minor images such as stuffed toys are near the bottom. So I used the top 50 images as test data and the next 300 images as training data. This minimizes the variation in the test data.
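The rank-based split can be sketched like this, assuming the images of one type are already stored in search-ranking order (best first). `rank_split` and the file names are hypothetical; only the 50/300 boundaries come from the text.

```python
def rank_split(ranked_images, n_test=50, n_train=300):
    """Split search results already sorted by rank (best first).

    The top n_test results become test data and the next n_train results
    become training data, so the test set holds the most typical images.
    Lower-ranked results beyond n_test + n_train are not used.
    """
    if len(ranked_images) < n_test + n_train:
        raise ValueError("not enough ranked images")
    test = ranked_images[:n_test]
    train = ranked_images[n_test:n_test + n_train]
    return train, test

# Hypothetical file names for one type, ranks 1..450
ranked = [f"tyrannosaurus_rank{r:03d}.jpg" for r in range(1, 451)]
train, test = rank_split(ranked)
print(test[0], test[-1])    # tyrannosaurus_rank001.jpg tyrannosaurus_rank050.jpg
print(train[0], train[-1])  # tyrannosaurus_rank051.jpg tyrannosaurus_rank350.jpg
```

Unlike the random split of section 2, no shuffling happens here: the ranking itself does the filtering.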

Here is the accuracy obtained with the reconfigured test data.

| Type | Accuracy [%] |
|---|---|
| Tyrannosaurus | 38.0 |
| Triceratops | 34.0 |
| Brachiosaurus | 60.0 |
| Stegosaurus | 68.0 |
| Iguanodon | 28.0 |
| Ornithomimus | 46.0 |
| Pteranodon | 32.0 |

The average accuracy improved to 43.7%. I think this is a good value, considering it was obtained from raw Google image search results.

Learning transition:

Overfitting was reduced a little.

5. Discussion

(1) Characteristics of big data

First of all, I should say that the deep learning approach I used today was quite rough. Images vary a lot: for example, there are face photographs, full-body photographs, and group photographs, and there are also variations in shooting angle. In general, it is inappropriate to learn all of them together. A good example is person classification: as introduced in many cases*4*5, the face region is first extracted from the image and then classified. In other words, two types of AI are used. In short, images collected with nothing more than a dinosaur keyword are not suited to achieving high accuracy.
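The two-stage structure described above (first locate the region of interest, then classify only that region) can be sketched as follows. Everything here is a hypothetical stand-in: `toy_detect` just takes the bounding box of non-zero pixels and `toy_classify` thresholds mean brightness, whereas real systems would use a trained detector (for example, a face detector) and a trained classifier. The sketch shows only the structure.

```python
from typing import Callable, List, Optional, Tuple

Image = List[List[int]]          # grayscale image as rows of pixel values
Box = Tuple[int, int, int, int]  # (top, left, height, width)

def crop(image: Image, box: Box) -> Image:
    """Cut the box out of the image."""
    top, left, height, width = box
    return [row[left:left + width] for row in image[top:top + height]]

def two_stage_classify(image: Image,
                       detect: Callable[[Image], Optional[Box]],
                       classify: Callable[[Image], str]) -> Optional[str]:
    """Stage 1 locates the region of interest; stage 2 classifies only that crop."""
    box = detect(image)
    if box is None:
        return None  # nothing detected: do not classify the raw image
    return classify(crop(image, box))

def toy_detect(image: Image) -> Optional[Box]:
    """Hypothetical detector: bounding box of all non-zero pixels."""
    coords = [(r, c) for r, row in enumerate(image) for c, v in enumerate(row) if v]
    if not coords:
        return None
    rows = [r for r, _ in coords]
    cols = [c for _, c in coords]
    top, left = min(rows), min(cols)
    return (top, left, max(rows) - top + 1, max(cols) - left + 1)

def toy_classify(patch: Image) -> str:
    """Hypothetical classifier: thresholds the mean pixel value."""
    pixels = [v for row in patch for v in row]
    return "bright" if sum(pixels) / len(pixels) > 128 else "dark"

image = [[0,   0,   0, 0],
         [0, 200, 220, 0],
         [0, 210, 190, 0],
         [0,   0,   0, 0]]

print(two_stage_classify(image, toy_detect, toy_classify))  # bright
print(toy_classify(image))                                  # dark
```

Classifying the whole toy image gives "dark" because the background pixels dominate the mean; cropping first removes that source of variation before the classifier sees the data.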

I deliberately adopted this method because today's purpose is not to pursue the accuracy of deep learning. The results of sections 2 and 3 show that a neural network does not become smarter no matter how much scattered data it learns. And big data is often scattered. There is nothing to gain from feeding big data into AI if the data was gathered without a clear intention. This is today's point.

More precisely, the variation itself varies. For example, if Google image search returned only academic illustrations, skeleton photographs, and stuffed toys, adding data might improve the accuracy. But minor images such as CD jackets, plastic models, and stylized (cartoon-like) illustrations tend to appear near the bottom of the ranking. I think this is why the accuracy did not improve even when the data was increased.

I have heard a salesman for cloud-based services say something like "store your logs now and apply deep learning to them later." You shouldn't trust that kind of talk. I think the problem is that "big data" and "AI" are promoted separately, and the performance of each is pursued in isolation. Data and algorithms should be matched from the beginning. It is important to collect data with a clear strategy for the analysis and the results you want to obtain; otherwise, the data will be wasted, no matter how much you collect. In the first place, the field where deep learning is applicable is very narrow. In my opinion, image processing is its only real opportunity. The salesman's talk symbolizes the unjustified divergence between data and algorithms.

The Japanese government says it will subsidize businesses in big data and AI, which suggests that these fields are not expected to be profitable without subsidies. I guess that in a few years many people will realize that learning from big data produces only predictable results. That will be the end of the AI boom.

(2) AI for AI → endless loop

In section 4, I used a characteristic of Google image search: it ranks famous images at the top. In a sense, I used another AI to improve the accuracy of my deep learning model.

To improve the accuracy further, one could add an AI that whitens out the background around the dinosaurs. Another option is to select only academic illustrations before classification. Introducing other AI to make an AI smarter... this becomes an endless loop. Sooner or later, humans have to prepare the images manually.

There was a reason for choosing dinosaurs as today's material. Dinosaurs died out in ancient times, so no definitive images of them exist. In addition, the way dinosaurs are depicted has changed as research has progressed. When I was a child, Tyrannosaurus was usually shown standing upright like Godzilla; nowadays it is usually shown walking with its tail extended and its body horizontal. Recently, feathered dinosaurs are trendy. Dinosaurs, whose inherent ambiguity makes learning difficult, were a perfect subject for today's trial.

Connecting multiple AIs to deal with big data is one way, but I don't think it is the only way.

(3) AI does not become GOD

I'm not saying there is no point in using data or AI. As I wrote in a past article, deep learning is a very powerful algorithm for image processing. I think training on composite photos*6 is one good way to make use of deep learning.

In general, I think it is important to clarify the purpose and use data in a simple way. AI will never become the GOD that Descartes spoke of, and it will never generate ideas beyond human imagination. In fact, Amazon's recommendations are themselves very simple, aren't they?

6. Afterword

It was fun to write about something I had always wanted to. In the near future, I will try classifying dinosaurs using composite images.

Today's source code is here*7. As written in the README, if you have trouble preparing the data, you can download it from here*8.

*1:https://changlikesdesktop.hatenablog.com/entry/2020/09/18/163520

*2:https://ja.wikipedia.org/wiki/%E3%83%93%E3%83%83%E3%82%B0%E3%83%87%E3%83%BC%E3%82%BF

*3:http://booksearch.blogspot.com/2010/08/books-of-world-stand-up-and-be-counted.html

*4:https://qiita.com/yottyann1221/items/a08300b572206075ee9f

*5:https://ai-coordinator.jp/face-recognition

*6:https://changlikesdesktop.hatenablog.com/entry/2020/07/02/070321

*7:https://github.com/changGitHubJ/dinasour

*8:https://drive.google.com/file/d/17exNvgD2nvPTOZ-iObMcXrOp90_7duVA/view?usp=sharing